How the RTX 3090 Actually Works: GPU Architecture notes...

I spent some time watching Branch Education's video on how GPUs work, specifically the RTX 3090, and took detailed notes. Figured I'd clean them up and share what I learned about the GA102 architecture.

The Hardware Breakdown

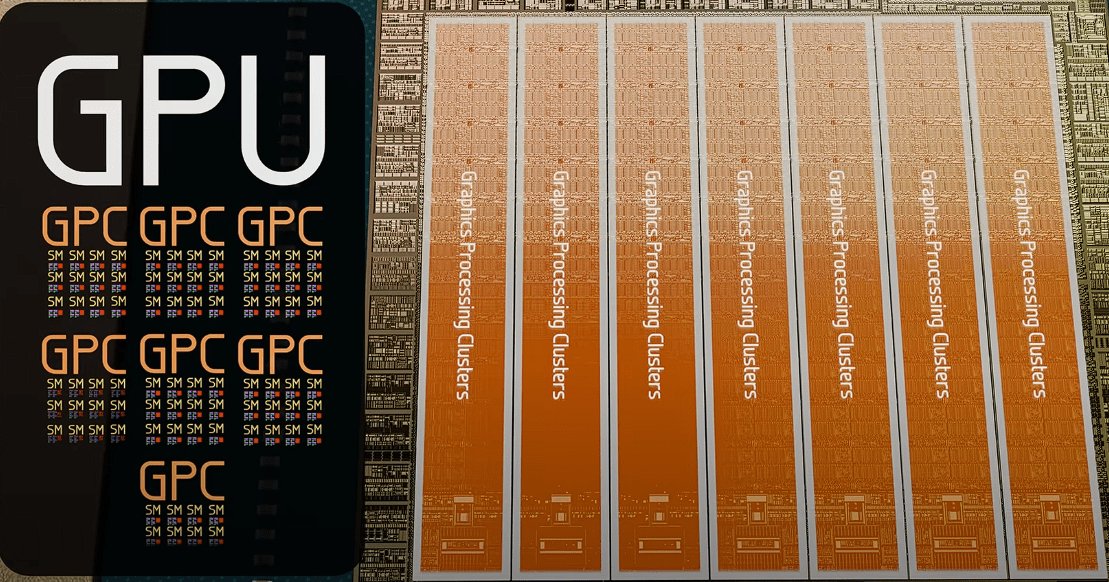

We're looking at GA102, which is the 3090's GPU processor architecture.

The Hierarchy

The architecture is organized in layers:

- 7 GPCs (Graphics Processing Clusters) at the top level

GPU architecture showing the 7 GPCs (Graphics Processing Clusters)

- Within each GPC, there are 12 SMs (Streaming Multiprocessors)

Internal structure of a Streaming Multiprocessor (SM)

- Inside each SM, there are 4 warp schedulers and 1 Ray Tracing core

- Inside each warp, there are 32 CUDA cores (shading cores) and 1 Tensor core

Total Core Count

Across the entire GPU:

- 10,752 CUDA cores

- 336 Tensor cores

- 84 Ray Tracing cores

Around the Edge

The chip's periphery includes:

- 12 graphics memory controllers

- NVLink controllers

- PCIe interface

- 6MB Level 2 SRAM cache at the bottom

- Gigathread Engine that manages all 7 GPCs and the streaming multiprocessors inside

Inside Each SM

Each streaming multiprocessor contains:

- 128KB of L1 cache/shared memory (configurable split)

What Each Core Does

CUDA Cores

Can be thought of as simple binary calculators - they handle addition, multiplication, and a few other basic operations.

Tensor Cores

Matrix multiplication and addition calculators. They're used the most when working with geometrical transformations and neural networks.

Ray Tracing Cores

The fewest and the largest cores. They're specially designed for ray tracing algorithms.

Key Terminologies

FMA (Fused Multiply-Add)

The operation A × B + C. This is a fundamental calculation that gets used constantly in GPU operations.

SIMD (Single Instruction, Multiple Data)

GPUs solve embarrassingly parallel problems using SIMD - applying one instruction to multiple data points simultaneously.

SIMT (Single Instruction, Multiple Threads)

Basically SIMD but adds a program counter, which avoids conflicts from dependency and branching of operations.

Computational Architecture → Physical Hardware

Now that we understand how SIMD/SIMT works, here's how the computational architecture maps to the physical hardware:

- Each instruction is completed by a thread

- A thread is paired with a CUDA core

- Threads are bundled into groups of 32 called warps

- The same sequence of instructions are issued to all threads in a warp

- Warps are grouped into thread blocks, which are handled by a Streaming Multiprocessor (SM)

- Thread blocks are grouped into grids, which are computed across the entire GPU

All these operations are managed and scheduled by the Gigathread Engine, which maps the available thread blocks to the streaming multiprocessors.